Hello Assistant!

2024 / 01 / 21

Background

A couple of months ago, OpenAI held its first keynote presentation and announced a number of new products. The one that really captured my imagination was the new Assistants Playground and the corresponding API changes.

While "Assistants" showed up early on, soon after OpenAI released its GPT-3.5 API, they were only available through third-party libraries such as Hugging Face and LangChain. Not that I'm against these tools, quite the opposite; I simply never had the time to delve deeper into them. In fact, considering how rapidly this space is changing, it's probably a good idea to use such a library. For example, the GPT itinerary project I recently wrote about already uses a deprecated version of the OpenAI chat API.

So What?

What really are "Assistants" in the first place? Contrary to a common misconception, they're a lot more than a chatbot. An Assistant can be seen as a fully autonomous entity which, given a set of tools and a goal, selects the best course of action to accomplish the task at hand. Assistants are extremely capable and can accomplish a huge range of goals in the digital domain (so far), as long as they are given the right tools and enough context.

I wanted to try out the new API and, in the process, "daydream" about some of the possibilities of having an Assistant for a specific set of tasks.

Task Objective

Just like the GPT itinerary mini-project, I really didn't want to spend more than a couple of hours. I was mainly interested in how the API works and how functions can be executed by the assistant. In summary, I wanted to accomplish the following:

1. Create a simple Assistant programmatically

2. Register a "Hello World" function

3. Instruct the Assistant to call the function when prompted

4. Send the function output back to the Assistant and read its response

Creating an Assistant

The first thing to do is to download and install the OpenAI Python library; OpenAI has a great "Quick Start" page that walks through the setup.

To create an Assistant, you simply call the "create" method and specify the following:

1. Instructions - the role of the assistant

2. Name

3. Tools - this is the interesting bit. Currently, Assistants support three types of tools: code interpreter, knowledge retrieval and function calling.

4. Model - the underlying GPT model (gpt-4-1106-preview in my case)

A quick aside on each of the tools, because they are all great. Code Interpreter allows the Assistant to write and run Python code in a sandboxed environment. Knowledge Retrieval is more than simply uploading a file for the Assistant to scan through: after the file is uploaded, it's automatically indexed and vectorised, which gives more contextually accurate responses to queries. Function calling lets the Assistant ask your own application to run a function you have described to it, which is what the rest of this post focuses on.

Here's an example of what creating my "Hello Assistant" looks like:

```python
from openai import OpenAI

# Requires OPENAI_API_KEY added to local environment
client = OpenAI()

assistant = client.beta.assistants.create(
    instructions="You are my first OpenAI Assistant! When prompted, please read the function output and add an encouraging message to the user.",
    name="Hello Assistant",
    tools=[{
        "type": "function",
        "function": {
            "name": "hello_world",
            "description": "A greeting from the Assistant to the World!",
            "parameters": {
                "type": "object",
                "properties": {}
            }
        }
    }],
    model="gpt-4-1106-preview",
)
```

Note the tools section, where the "hello_world" function is registered. The definition gives the Assistant the function's name, a brief description of what it does and a JSON Schema describing its input parameters (none, in this case). These details matter: when they are well defined, they allow the Assistant to select the most appropriate tool for a given query.
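Writing these definitions by hand gets tedious once there are more than a couple of functions. The sketch below builds a dictionary of the same shape from a plain Python function using `inspect`. The `function_tool` helper, the `greet` example and the annotation-to-JSON-type mapping are my own hypothetical additions, not part of the OpenAI library, and only simple scalar parameters are handled.

```python
import inspect

# Hypothetical mapping from Python annotations to JSON Schema type names.
# Unannotated or unknown parameter types fall back to "string".
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_tool(fn, description):
    """Build a function-tool definition shaped like the "hello_world" entry,
    inferring the parameter schema from fn's signature."""
    properties = {}
    required = []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        # Parameters without a default value are marked as required
        if param.default is inspect.Parameter.empty:
            required.append(name)
    parameters = {"type": "object", "properties": properties}
    if required:
        parameters["required"] = required
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            "description": description,
            "parameters": parameters,
        },
    }


def hello_world():
    return 'Hello Assistant!'


# Hypothetical function with parameters, to show the inferred schema
def greet(name: str, times: int = 1):
    return ", ".join([f"Hello {name}!"] * times)


print(function_tool(hello_world, "A greeting from the Assistant to the World!"))
print(function_tool(greet, "Greets someone by name"))
```

The resulting dictionaries could then be passed in the `tools` list of `assistants.create`; anything more complex (nested objects, enums, per-parameter descriptions) would still need to be written by hand.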

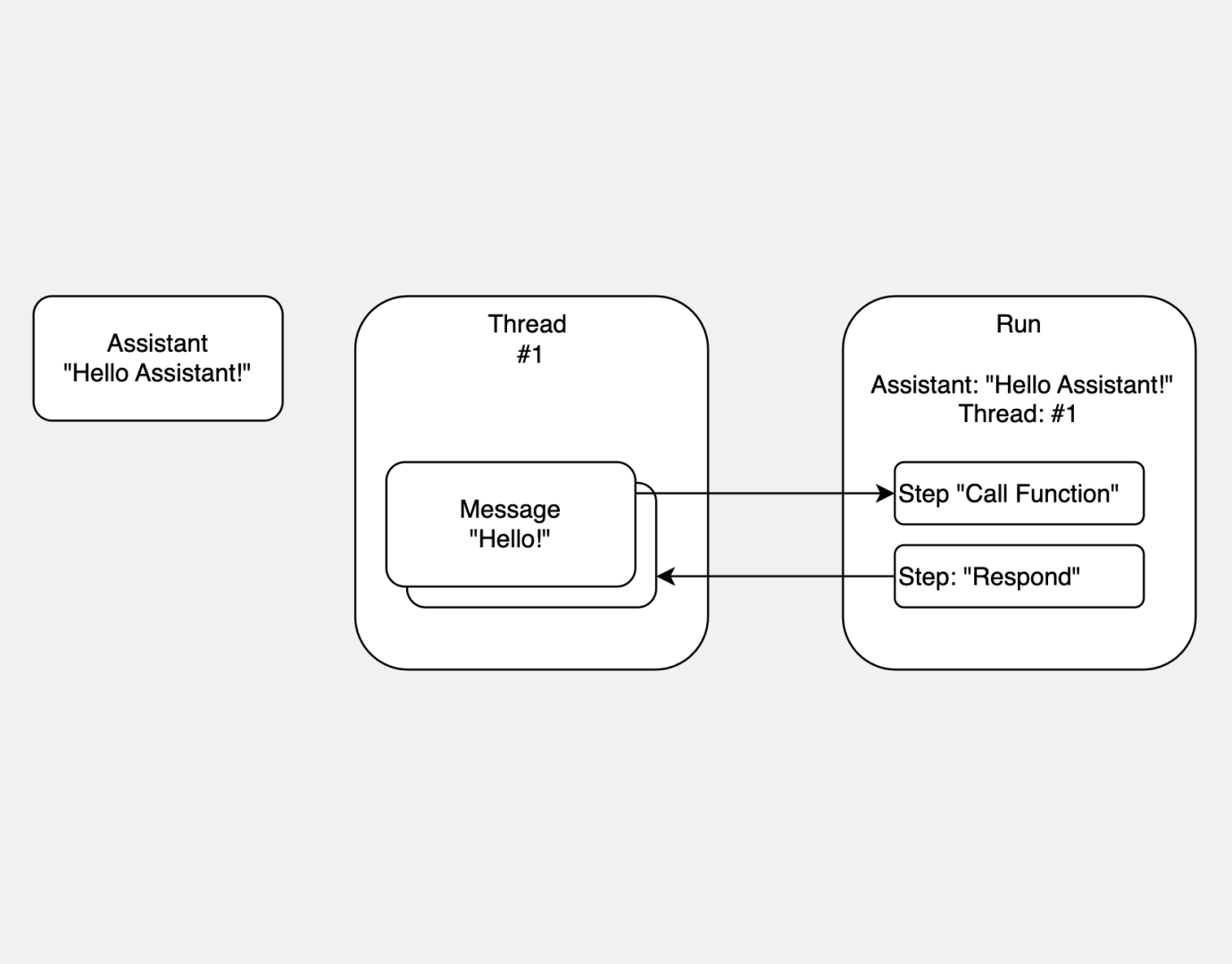

Once created, the API returns a unique ID, which can later be used to send queries, or rather to post messages. A notable change in the new API is that clients no longer need to keep track of the conversation history and send it as context with every request. Instead, the context is kept in a separate "Thread", hosted by OpenAI, to which messages are posted. Assistants are independent of Threads and Messages: Threads and Messages can be created without reference to any Assistant, and the same Thread can be run against different Assistants. Each Run executes one Thread against one Assistant, which allows multiple clients to be served by the same Assistant while their conversations stay separate from each other.

A high-level overview of the relationship between Assistants, Threads, Messages and Runs.
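To make these relationships concrete, here is a toy, in-memory model. It is not the OpenAI API; the `Assistant`, `Thread` and `run` names are purely illustrative. It shows two clients sharing one Assistant while keeping separate conversations, and one Thread later being run against a second Assistant.

```python
from dataclasses import dataclass, field


@dataclass
class Assistant:
    name: str


@dataclass
class Thread:
    # Each Thread owns its own message history as (role, content) pairs
    messages: list = field(default_factory=list)

    def post(self, role, content):
        self.messages.append((role, content))


def run(thread, assistant):
    """Toy stand-in for a Run: the assistant sees only this thread's messages
    and appends its reply to this thread (assumes a user message exists)."""
    last_user = next(c for r, c in reversed(thread.messages) if r == "user")
    reply = f"{assistant.name} replying to: {last_user}"
    thread.post("assistant", reply)
    return reply


helper = Assistant("Hello Assistant")
alice, bob = Thread(), Thread()
alice.post("user", "Hi from Alice")
bob.post("user", "Hi from Bob")
run(alice, helper)  # same Assistant, separate conversations
run(bob, helper)

# The same Thread can later be run against a different Assistant
second = Assistant("Second Assistant")
alice.post("user", "Follow-up")
run(alice, second)
```

In the real API the Thread and its Messages live on OpenAI's side, so the client only holds IDs; the toy keeps everything local to show the shape of the relationships.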

Another addition is the concept of a "Run". Messages posted to a Thread are processed by the Assistant through a Run cycle, during which the Assistant determines which tool, if any, best responds to the query. I'll go through more details about the Run cycle below.
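A Run moves through a small set of statuses. As a rough sketch of how a client might react to each one, the hypothetical `next_step` helper below maps a status string to an action. The status names are the ones OpenAI's Assistants documentation lists at the time of writing, so treat the exact set as an assumption that may change.

```python
# Status names as listed in the Assistants API beta docs at the time of writing
PENDING = {"queued", "in_progress", "cancelling"}
TERMINAL = {"completed", "failed", "cancelled", "expired"}


def next_step(status):
    """Hypothetical helper: what should the client do for this run status?"""
    if status in PENDING:
        return "poll"  # keep retrieving the Run until it changes
    if status == "requires_action":
        return "submit_tool_outputs"  # run our function, send back the result
    if status in TERMINAL:
        return "read_messages" if status == "completed" else "handle_error"
    raise ValueError(f"unknown run status: {status!r}")


for s in ["queued", "requires_action", "completed", "expired"]:
    print(s, "->", next_step(s))
```

Only `requires_action` needs our own code to do real work; every other status means either waiting or reading the outcome.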

Creating a Message

Before asking the Assistant to execute a function, I had to add the "hello_world" function to my Python app, along with a "wait_on_run" helper, which polls the Run until it is no longer queued or in progress. Here's the code:

```python
import time

# The function the Assistant will ask us to call
def hello_world():
    return 'Hello Assistant!'

# Poll the Run until it leaves the "queued"/"in_progress" states,
# e.g. when it reaches "requires_action" or "completed"
def wait_on_run(run, thread):
    while run.status == "queued" or run.status == "in_progress":
        run = client.beta.threads.runs.retrieve(
            thread_id=thread.id,
            run_id=run.id,
        )
        time.sleep(1.0)
    return run
```
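The `wait_on_run` helper polls forever if a Run never leaves those states. A slightly more defensive sketch adds a timeout and takes the fetch call as a parameter, so it can be tried without hitting the API. The name `poll_until_settled` and its parameters are hypothetical; the fake statuses in the usage lines stand in for real Run objects.

```python
import time
from types import SimpleNamespace


def poll_until_settled(fetch, pending=("queued", "in_progress"),
                       interval=1.0, timeout=60.0, sleep=time.sleep):
    """Call fetch() until the returned object's .status leaves `pending`.

    fetch is any zero-argument callable returning an object with a .status
    attribute. Raises TimeoutError if still pending after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    obj = fetch()
    while obj.status in pending:
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run still {obj.status!r} after {timeout}s")
        sleep(interval)
        obj = fetch()
    return obj


# Offline usage: a fake fetch that walks through a few statuses, no real sleeping
_statuses = iter(["queued", "in_progress", "requires_action"])
settled = poll_until_settled(lambda: SimpleNamespace(status=next(_statuses)),
                             sleep=lambda seconds: None)
print(settled.status)
```

Against the real API, `fetch` could be `lambda: client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)`, using the same call as `wait_on_run`.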

Next, it's time to create a new Thread and post the first message in it. Creating a new Thread is straightforward:

```python
from openai import OpenAI

# Requires OPENAI_API_KEY added to local environment
client = OpenAI()

# Create a new Thread to post a Message in
thread = client.beta.threads.create()

# Create a Message
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    content="Can you please run the function provided and read out the output, followed by an encouraging message?",
    role='user'
)
```

This code creates a Thread and posts a message to it. To send the message to the Assistant, a new Run needs to be created, like so:

```python
# Submit the Thread to the Assistant via a Run operation
run = client.beta.threads.runs.create(
    thread_id=thread.id,
    assistant_id='asst_id'  # the ID returned by assistants.create (assistant.id)
)
```

With the Run created, the Thread, along with its first message, is sent to the Assistant for processing.

The Run Cycle

Once a Run is created, the Assistant will attempt to process the request and use the best tool for the job. If the tool is a function, the Assistant selects the function it thinks best answers the query and, if that function has input parameters, supplies their values. In my case, "hello_world" takes no input parameters, so the Assistant simply requests a call to it. Since the function resides in my Python application, the Assistant cannot run it itself: the Run pauses with the status "requires_action" and waits until the application runs the function and submits its output back to the Run. This part of the Run cycle looks like this:

```python
# Wait for the Run to leave "queued"/"in_progress"; we expect "requires_action"
run = wait_on_run(run, thread)

if run.status == "requires_action":
    tool_call = run.required_action.submit_tool_outputs.tool_calls[0]
    function_to_call = tool_call.function.name

    # Not a glamorous way to handle this
    if function_to_call == 'hello_world':
        response = hello_world()

        # Submit result to Assistant
        run = client.beta.threads.runs.submit_tool_outputs(
            run_id=run.id,
            thread_id=thread.id,
            tool_outputs=[{
                'tool_call_id': tool_call.id,
                'output': response,
            }],
        )
```
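The if-statement works for a single function, but a Run can request several tool calls at once (`tool_calls` is a list). A dictionary-based dispatch scales better. This is a sketch under assumptions: `TOOLS` and `build_tool_outputs` are hypothetical names, and each call object is assumed to expose `.id`, `.function.name` and `.function.arguments` (a JSON-encoded string), like the `tool_call` used above. The fake calls at the bottom only exist to exercise it offline.

```python
import json
from types import SimpleNamespace


def hello_world():
    return 'Hello Assistant!'


# Hypothetical registry mapping tool names to local Python functions
TOOLS = {"hello_world": hello_world}


def build_tool_outputs(tool_calls, registry=TOOLS):
    """Run every requested tool call and return a list of
    {'tool_call_id', 'output'} dicts, one per call."""
    outputs = []
    for call in tool_calls:
        fn = registry.get(call.function.name)
        if fn is None:
            # Report the problem to the Assistant instead of crashing the app
            result = f"Error: unknown function {call.function.name!r}"
        else:
            # Arguments arrive as a JSON string; treat empty as no arguments
            kwargs = json.loads(call.function.arguments or "{}")
            result = fn(**kwargs)
        outputs.append({"tool_call_id": call.id, "output": str(result)})
    return outputs


fake_calls = [
    SimpleNamespace(id="call_1", function=SimpleNamespace(name="hello_world", arguments="{}")),
    SimpleNamespace(id="call_2", function=SimpleNamespace(name="not_registered", arguments="{}")),
]
outputs = build_tool_outputs(fake_calls)
print(outputs)
```

With a real Run, the result could be passed as `tool_outputs=build_tool_outputs(run.required_action.submit_tool_outputs.tool_calls)`. Returning an error string for unknown functions, rather than raising, is a design choice: it gives the Assistant a chance to recover instead of leaving the Run waiting.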

The crucial part here is that the Python application executes the function selected by the Assistant and then submits its output as an update to the Run. All that remains is to wait for the Assistant to read the function output and post its response as a new message. To read the full thread of messages, I used the following:

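```python
# Wait for the final Run to complete
run = wait_on_run(run, thread)

# List the Thread's messages (newest first) and print each one's text
messages = client.beta.threads.messages.list(thread_id=thread.id)
for message in messages.data:
    print(f"{message.role}: {message.content[0].text.value}")
```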
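Listing the messages returns the whole Thread, user messages included. To pull out just the Assistant's latest reply, a small helper along these lines could be used. It is a hypothetical sketch: it assumes the messages from `client.beta.threads.messages.list(thread_id=thread.id).data` come newest first (the default order) and that each has a `.role` and a `.content` list whose text parts have `.type == "text"` and `.text.value`. The fake messages below stand in for real API objects.

```python
from types import SimpleNamespace


def latest_assistant_reply(messages):
    """Return the text of the newest assistant message, or None if none exists.

    Assumes `messages` is ordered newest first.
    """
    for message in messages:
        if message.role != "assistant":
            continue
        # Join all text parts; non-text parts (e.g. images) are skipped
        parts = [c.text.value for c in message.content if c.type == "text"]
        return "\n".join(parts)
    return None


def _text_message(role, value):
    # Build a fake message with a single text part
    return SimpleNamespace(role=role, content=[
        SimpleNamespace(type="text", text=SimpleNamespace(value=value))])


fake_thread = [
    _text_message("assistant", "Hello there! Keep up the great spirit!"),
    _text_message("user", "Can you please run the function provided?"),
]
reply = latest_assistant_reply(fake_thread)
print(reply)
```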

And here's the response:

"Hello there! Great to see you here. Keep up the great spirit!"

Conclusion

That's it. Going from creating a new Assistant to executing a simple "hello_world" function only takes a few lines of code. This opens up a lot of exciting opportunities. Given enough context, Assistants can not only understand the problem they need to solve, but also intelligently select the best tool for the job. Given the interconnected nature of our modern world, there are tons of opportunities for Assistants to help streamline some aspects of our work. We're only scratching the surface of what Assistants can do for us, but the future is definitely looking very interesting!